Development

Workflow

First, I’d like to address elements of my Max workflow. In my patch I used many send (‘s’ or ‘s~’) and receive (‘r’ or ‘r~’) objects. These objects allow data or audio signals to be sent from one object to another without a patch cable, which could otherwise stretch across a large part of the patch. As a result, send and receive objects keep my patch from appearing convoluted, making it straightforward to follow when troubleshooting and changing elements.

Another key aspect of my patching workflow is the use of subpatches. These let me place sections of my patch into their own subpatch, either by encapsulating a group of objects (using Command+Shift+E) or by creating a patcher object, which opens a new, empty subpatch to start patching in. Parts of the patch that do not need to be constantly visible can therefore stay hidden, and I can open the subpatch whenever I need to view them. Furthermore, subpatches reduce the number of objects and patch cables displayed, maximising the space available in the patch. Subpatches can contain inlet and outlet objects, which connect objects outside the subpatch to objects inside it. In the inspector tab you can write a description for each inlet and outlet, so you always know what is being patched into and out of the subpatch. This helps keep the patch easy to follow.

Poly~

True polyphony refers to ‘an instrument with the means to produce multiple notes simultaneously and that each note has independent envelope control’ (Pejrolo and Metcalfe, 2017, p.17). This contrasts with monophonic instruments, which can only play one note at a time. Analog polyphonic synthesisers have existed since the late 1930s but gained mass popularity in the mid-to-late 1970s with instruments such as the Prophet 5 (Morgan, 2022).

For my synthesiser to produce polyphony, I used the poly~ object, which hosts multiple voices. I specified that up to 16 voices could play at the same time. The poly~ object requires a separate Max patch to be created; this patch is named in the object, followed by the number of voices, for example, ‘poly~ synthesiser_voice 16’. The patch needs to be saved in the same folder as the main patch so that Max can find it. For ease, I’ll refer to the patch loaded by poly~ as the ‘voice patch’ and the patch containing everything else as the ‘main patch’.
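
poly~ handles the assignment of notes to voices itself. As a rough illustration of what a fixed pool of 16 voices means, the Python sketch below hands each new note to the first free voice and, once all 16 are busy, reuses the voice that started longest ago. The class name and the oldest-voice stealing policy are assumptions for illustration, not a description of poly~'s internals.

```python
class VoiceAllocator:
    """Hypothetical fixed-size voice pool (illustration only, not poly~ itself)."""

    def __init__(self, num_voices=16):
        self.notes = [None] * num_voices  # note held by each voice; None = free
        self.order = []  # busy voice indices, oldest first

    def note_on(self, note):
        """Assign `note` to a voice and return the voice index."""
        if None in self.notes:
            voice = self.notes.index(None)  # first free voice
        else:
            voice = self.order.pop(0)  # all busy: steal the oldest (assumed policy)
        self.notes[voice] = note
        self.order.append(voice)
        return voice

    def note_off(self, note):
        """Free the voice playing `note`; return its index, or None if not playing."""
        if note not in self.notes:
            return None
        voice = self.notes.index(note)
        self.notes[voice] = None
        self.order.remove(voice)
        return voice


# Usage: a 17th simultaneous note takes over voice 0, the oldest one.
pool = VoiceAllocator(16)
for n in range(16):
    pool.note_on(60 + n)
print(pool.note_on(100))  # 0
```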

The voice patch requires ‘in’ objects to connect the main patch to the voice patch, carrying data such as MIDI and user interface control values. These objects are numbered (e.g., ‘in 1’, ‘in 2’, or ‘in~ 1’ for audio signals), and in the inspector tab you can add a description to each. As a result, when patching into the poly~ object, the description is displayed when you hover over the input, in the same way as for inlet objects inside subpatches. This is essential for keeping track of what is patched into the poly~ object. The same applies to output objects (e.g., ‘out~ 1’).

Inside the voice patch, I used various send (‘s’ or ‘s~’) and receive (‘r’ or ‘r~’) objects. Inside poly~ it is essential to prefix the send or receive name with ‘#0_’ (e.g., ‘s #0_MIDI’) (Elandres, 2008). Max replaces ‘#0’ with a number unique to that instance, so if the device is used on multiple tracks in Ableton, each copy’s sends and receives stay separate and information is not sent to another track using the same device.
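
Max performs the ‘#0’ substitution itself. The Python sketch below only models the idea: a shared table of named receivers stands in for send and receive, and each device copy expands ‘#0’ to its own number before using a name, so two copies never hear each other. The class names and the starting number 1001 are hypothetical.

```python
import itertools

# Hypothetical starting value; Max chooses its own numbers for '#0'.
_next_id = itertools.count(1001)


class Bus:
    """Shared name -> receivers table, standing in for send/receive."""

    def __init__(self):
        self.receivers = {}

    def receive(self, name, callback):
        self.receivers.setdefault(name, []).append(callback)

    def send(self, name, value):
        for callback in self.receivers.get(name, []):
            callback(value)


class DeviceCopy:
    """One copy of the device on a track; '#0' expands to its own number."""

    def __init__(self, bus):
        self.bus = bus
        self.uid = next(_next_id)
        self.received = []
        bus.receive(self.expand("#0_MIDI"), self.received.append)  # r #0_MIDI

    def expand(self, name):
        return name.replace("#0", str(self.uid))

    def send_midi(self, value):
        self.bus.send(self.expand("#0_MIDI"), value)  # s #0_MIDI


bus = Bus()
track1, track2 = DeviceCopy(bus), DeviceCopy(bus)
track1.send_midi(60)
print(track1.received, track2.received)  # [60] []
```

Had both copies used the plain name ‘MIDI’, the message sent by the first copy would also have reached the second.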

Basic Shapes

Early analog synthesisers provided four waveforms, in the belief that ‘they offered a good variety of choice for the subtractive synthesis process’ (Hass, 2021). Subtractive synthesis refers to synthesis ‘based on the alteration of specific frequencies with a filter’ (Hutchinson, 2020). Note the presence of a filter in the output section of the synthesiser.

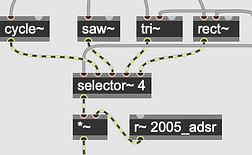

Firstly, I wanted to incorporate the four basic waveform shapes included in the majority of synthesisers. Max 8 includes an object for each of these waveforms: cycle~ for a sine wave, tri~ for a triangle wave, saw~ for a sawtooth wave and rect~ for a square wave. I incorporated two oscillators, each of which can independently select any of these waveforms via a menu user interface object (live.menu). The menu sends its selection to a selector~ object, which determines the waveform that is output. The selected waveform is then multiplied (*~) by the output of an adsr~ object, whose attack, decay, sustain and release parameters are set by live.dial objects, applying a standard ADSR envelope to the oscillators.
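
The signal path above (live.menu choosing a selector~ input, then *~ with adsr~) can be approximated in Python as follows. This is a sketch under stated assumptions: the waveforms are naive rather than band-limited like Max's tri~, saw~ and rect~, the envelope segments are linear, and the function names and 44.1 kHz sample rate are illustrative.

```python
import math

SAMPLE_RATE = 44100  # assumed sample rate in Hz


def waveform(shape, phase):
    """Naive (not band-limited) waveform value for a phase in [0, 1)."""
    if shape == 1:  # sine (cycle~)
        return math.sin(2 * math.pi * phase)
    if shape == 2:  # triangle (tri~)
        return 1 - 4 * abs(phase - 0.5)
    if shape == 3:  # sawtooth (saw~)
        return 2 * phase - 1
    if shape == 4:  # square (rect~ at a 50% duty cycle)
        return 1.0 if phase < 0.5 else -1.0
    return 0.0  # like selector~ input 0: no signal passes


def adsr(t, gate_time, attack, decay, sustain, release):
    """Linear ADSR level at time t (s) for a note held for gate_time seconds."""
    def held(time):
        if time < attack:
            return time / attack
        if time < attack + decay:
            return 1 - (1 - sustain) * (time - attack) / decay
        return sustain

    if t < gate_time:
        return held(t)
    released = t - gate_time
    if released >= release:
        return 0.0
    return held(gate_time) * (1 - released / release)


def render_note(shape, freq, duration, gate_time, envelope):
    """Selected waveform multiplied by the envelope, as with *~ and adsr~."""
    out, phase = [], 0.0
    for n in range(round(duration * SAMPLE_RATE)):
        t = n / SAMPLE_RATE
        out.append(waveform(shape, phase) * adsr(t, gate_time, *envelope))
        phase = (phase + freq / SAMPLE_RATE) % 1.0
    return out
```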

Additive Synthesis

Additive synthesis can be defined as a type of synthesis in which combinations of ‘simple waveforms at different frequencies, amplitudes, and phase create complex waveforms’ (Hutchinson, 2020). It builds sounds from harmonic partials, starting ‘with the fundamental and then [adds] the overtones necessary for achieving the desired timbre’ (Pejrolo and Metcalfe, 2017, p.175).

The additive synthesis section of the synthesiser was built following a tutorial by Pete Batchelor (MUST1002, 2017). There are five harmonics for the two basic-shape oscillators, all controlled by ‘slider’ user interface objects. Additive synthesis can be switched on and off with a live.text object reading ‘on’ or ‘off’, connected to a gate~ that, when closed, prevents the frequency derived from MIDI from reaching any of the harmonics. Unlike in the tutorial, the harmonics switch to whichever waveform the oscillator is set to. Each ascending harmonic multiplies the incoming frequency by a successively larger integer, producing the harmonic series. Additionally, each harmonic is multiplied by the same attack, decay, sustain and release envelope as the main oscillators.
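
As a rough model of the harmonic series described above, this Python sketch sums five partials at integer multiples of the fundamental, each weighted by a slider level, and outputs silence when switched off, as the closed gate~ does. It uses sine partials for simplicity, whereas the synthesiser's harmonics follow the oscillator's selected waveform; the normalisation step and the function names are assumptions.

```python
import math


def partial_frequencies(fundamental, count=5):
    """Harmonic series: the nth partial sits at n times the fundamental."""
    return [fundamental * n for n in range(1, count + 1)]


def additive_sample(t, fundamental, levels, enabled=True):
    """One output sample at time t (seconds) from sine partials.

    levels[k] is a slider value (0-1) for partial k + 1. The sum is divided
    by the total level so the peak stays within -1..1 (a design assumption).
    """
    if not enabled or sum(levels) == 0:
        return 0.0  # like a closed gate~: no frequency reaches the partials
    total = 0.0
    for freq, level in zip(partial_frequencies(fundamental, len(levels)), levels):
        total += level * math.sin(2 * math.pi * freq * t)
    return total / sum(levels)
```

For a 110 Hz fundamental, `partial_frequencies(110)` gives the five partials at 110, 220, 330, 440 and 550 Hz.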

Drawable Wavetable

Wavetable synthesis involves ‘creating sound by cycling arbitrary period waveforms’ (Hutchinson, 2020). This requires an oscillator to play back the waveform. Compared with the traditional basic waveform shapes, with wavetables ‘the waves are far more complex… options are now expanded to a variety of raw sounds’ (Pejrolo and Metcalfe, 2017, p.26).

Wavetable synthesis is present in my synthesiser in the form of a drawable wavetable. To achieve this, I followed Aaron Myles Pereira’s tutorial (2022) and then integrated the drawable wavetable into the poly~ object. As in the tutorial, the wavetable is played by the wave~ object, which reads its waveform from a buffer~ object. The itable object is used to draw the wavetable, and a poke~ object writes the values from the itable into the buffer~. This works by giving the buffer’s name to both objects (e.g., ‘buffer~ window’ and ‘poke~ window’). The wave~ object then reads from the buffer when given the same name (e.g., ‘wave~ window’). Inside poly~, these names are prefixed with ‘#0_’ for the same reason as the sends inside poly~. The wavetable’s output is multiplied by the same attack, decay, sustain and release envelope as the basic shapes synthesis.
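
In Max, poke~ writes into the buffer~ and wave~ reads it back, driven by a phase signal such as phasor~. The Python sketch below imitates that flow with a plain list standing in for the buffer: drawn points are stretched across the table, and playback cycles through it with linear interpolation. The table size and the function names are assumptions.

```python
TABLE_SIZE = 512  # hypothetical buffer length in samples


def draw_table(points, size=TABLE_SIZE):
    """Stretch hand-drawn points (-1..1) across the buffer, as poke~ fills buffer~."""
    return [float(points[i * len(points) // size]) for i in range(size)]


def read_table(table, phase):
    """Linearly interpolated lookup for a phase in [0, 1), wrapping at the end."""
    pos = phase * len(table)
    i = int(pos)
    frac = pos - i
    a = table[i % len(table)]
    b = table[(i + 1) % len(table)]
    return a + (b - a) * frac


def wavetable_osc(table, freq, num_samples, sample_rate=44100):
    """Cycle through the table at `freq` Hz, like a phasor~ driving wave~."""
    out, phase = [], 0.0
    for _ in range(num_samples):
        out.append(read_table(table, phase))
        phase = (phase + freq / sample_rate) % 1.0
    return out
```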

FM Synthesis

FM synthesis uses two oscillators: a modulator oscillator and a carrier oscillator. ‘The modulator oscillator modulates the frequency of the waveform generated by the carrier oscillator within the audio range, thus producing new harmonics’ (Apple, 2022, p.750). These harmonics are referred to as sidebands.

To achieve FM synthesis in my synthesiser I used methods detailed in a tutorial by Daniel Dehaan (2016). As in the tutorial, the modulator has two parameters controlled by live.dials. The first, ‘amount’, multiplies (*~) the incoming frequency from the MIDI note by a value between 0 and 1. The second, ‘brightness’, multiplies the signal level of the modulator by a value between 0 and 1. In addition, the modulator has its own attack, decay, sustain and release envelope. Unlike in the tutorial, the modulator offers a choice of sine, saw, triangle or square waveform. I also added the ability to route the modulator to individual oscillators.
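
The following Python sketch shows a common two-oscillator FM formulation with sine waves; it does not reproduce the tutorial's patch. Here ‘amount’ sets the modulator frequency as a fraction of the carrier frequency, and ‘brightness’ scales the modulator's level, which is turned into a frequency deviation through a hypothetical `max_index` constant. A helper lists where the sidebands fall.

```python
import math


def fm_tone(carrier_hz, amount, brightness, num_samples,
            sample_rate=44100, max_index=5.0):
    """Two-oscillator FM with sine waves.

    amount: modulator frequency as a fraction (0-1) of the carrier frequency.
    brightness: modulator level (0-1); max_index is a hypothetical constant
    that turns it into a modulation index (peak deviation / modulator freq).
    """
    mod_hz = carrier_hz * amount
    peak_deviation_hz = brightness * max_index * mod_hz
    out = []
    phase = 0.0  # carrier phase in cycles
    for n in range(num_samples):
        modulator = math.sin(2 * math.pi * mod_hz * n / sample_rate)
        out.append(math.sin(2 * math.pi * phase))
        # the modulator pushes the carrier's instantaneous frequency up and down
        phase = (phase + (carrier_hz + peak_deviation_hz * modulator) / sample_rate) % 1.0
    return out


def sideband_frequencies(carrier_hz, mod_hz, pairs=3):
    """Sidebands appear at carrier +/- k * modulator frequency."""
    return [(carrier_hz - k * mod_hz, carrier_hz + k * mod_hz) for k in range(1, pairs + 1)]
```

With brightness at 0 the output is a plain sine at the carrier frequency; raising it spreads energy into the sidebands, for example `sideband_frequencies(440, 220, 2)` gives the pairs (220, 660) and (0, 880).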

Effects

The oscillators’ signal can pass through six effects: compressor, EQ, overdrive, bitcrusher, reverb and delay. Each effect can be turned on or off using a live.toggle object connected to a selector~. The compressor uses Max’s ‘komp’ object, a subpatch with parameters for threshold, ratio, attack, release, lookahead and output, and I created a live.dial object for each of these parameters in the user interface. For EQ, I adapted ‘Max EqParametric4’ from Max for Live Building Tools (Ableton, no date). This uses cascade~ for the filtering, with filtergraph~ providing the interface for each band’s filter type and parameters, which are passed to cascade~. The overdrive uses overdrive~, which has only one parameter, drive (1-11), controlled by a live.dial object. For the bitcrusher, I used the degrade~ object, which has parameters for sampling rate and word size (bit depth).
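
A bitcrusher such as degrade~ combines two kinds of degradation. The Python sketch below shows one simple way to do both: holding each kept sample for several samples to lower the effective sampling rate, and rounding to a limited number of levels to reduce the word size. degrade~'s own algorithm may differ; the function names are hypothetical, and `effect_slot` assumes that switching an effect off passes the dry signal through unchanged.

```python
def bitcrush(samples, rate_ratio=0.5, bits=8):
    """Reduce sampling rate and word size (a simple model, not degrade~'s code).

    rate_ratio: fraction of the original rate kept (0-1]; each kept sample is held.
    bits: word size used to quantise a signal in the -1..1 range.
    """
    step = max(1, round(1 / rate_ratio))  # samples to hold each value for
    levels = 2 ** (bits - 1)  # quantisation steps per polarity
    out, held = [], 0.0
    for i, x in enumerate(samples):
        if i % step == 0:
            held = round(x * levels) / levels  # snap to the nearest level
        out.append(held)
    return out


def effect_slot(samples, enabled, effect, **params):
    """live.toggle + selector~ bypass (assumed): dry when off, processed when on."""
    return effect(samples, **params) if enabled else list(samples)


signal = [0.1, 0.2, 0.3, 0.4]
print(effect_slot(signal, True, bitcrush, rate_ratio=1.0, bits=2))  # [0.0, 0.0, 0.5, 0.5]
```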

The reverb is a Max external by Nathan Wolek (Cycling '74, no date) called nw.gverb~ (bfhurman, 2020). This object has a single decay parameter. A concern with reverb externals in visual programming languages is that feedback loops can become unstable if they are not coded properly (Sound Simulator, 2022). Limiting this reverb’s controls to one decay parameter greatly reduces that risk. The delay uses tapin~ and tapout~, with tapout~ setting the delay time, and a feedback loop controlled by a live.dial. It is a simplified version of the delay taught in the University of Liverpool MUSI209 module, with the LFO controls removed because LFOs are already present in the synthesiser itself.
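
tapin~ and tapout~ share a block of delay memory. This Python sketch models the same structure with a circular buffer, a feedback amount and a dry/wet mix. The delay time is given in samples rather than milliseconds, the names are hypothetical, and feedback of 1 or more is rejected because the echoes would then never die away, which is the kind of runaway loop mentioned above.

```python
def feedback_delay(samples, delay_samples, feedback, mix=0.5):
    """Circular-buffer delay with feedback (a model of tapin~/tapout~ wiring)."""
    if delay_samples < 1:
        raise ValueError("delay_samples must be at least 1")
    if not 0.0 <= feedback < 1.0:
        raise ValueError("feedback must be in [0, 1) so the echoes die away")
    buffer = [0.0] * delay_samples  # the delay memory
    write = 0
    out = []
    for x in samples:
        delayed = buffer[write]  # value written delay_samples ago
        buffer[write] = x + delayed * feedback  # input plus fed-back echo
        write = (write + 1) % delay_samples
        out.append((1 - mix) * x + mix * delayed)
    return out


# A single impulse through a 2-sample delay, fully wet, echoes at halving levels.
print(feedback_delay([1.0, 0, 0, 0, 0, 0, 0], 2, 0.5, mix=1.0))
# [0.0, 0.0, 1.0, 0.0, 0.5, 0.0, 0.25]
```

A delay time in milliseconds converts to samples as `round(ms / 1000 * sample_rate)`.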

LFO and Envelope Modulators

For modulation, the synthesiser incorporates four low-frequency oscillators (LFOs), each offering five waveform options: sine wave (using cycle~), saw wave (using phasor~), triangle wave (using tri~), square wave (using rect~) and a random generator (using rand~). A live.dial sets the rate of each LFO as a frequency (0-5 Hz), and another live.dial sets its depth by multiplying the waveform’s signal by a value between 0 and 1. The signal is then fed into snapshot~, which converts the audio signal into numerical data. This data is connected to a matrix with four inputs, one for each LFO, and seven outputs connected to different parameter controls. Before the data reaches a parameter, it passes through a scale object so that the modulation stays within the range of the parameter the LFO is connected to.
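
The LFO chain (waveform, depth, snapshot~, scale) can be sketched in Python as below. All waveforms are treated as bipolar (-1 to 1), the random option is a stepped stand-in for rand~ with one new value per cycle, and the function names and the 200-2000 Hz target range in the usage line are hypothetical.

```python
import math
import random


def lfo(shape, rate_hz, depth, t):
    """Bipolar LFO value scaled by depth (0-1) at time t seconds."""
    phase = (rate_hz * t) % 1.0
    if shape == "sine":        # cycle~
        v = math.sin(2 * math.pi * phase)
    elif shape == "saw":       # phasor~, rescaled here to -1..1 (assumption)
        v = 2 * phase - 1
    elif shape == "triangle":  # tri~
        v = 1 - 4 * abs(phase - 0.5)
    elif shape == "square":    # rect~
        v = 1.0 if phase < 0.5 else -1.0
    elif shape == "random":    # stepped stand-in for rand~: one value per cycle
        v = random.Random(int(rate_hz * t)).uniform(-1.0, 1.0)
    else:
        raise ValueError(f"unknown shape: {shape}")
    return depth * v  # the depth dial multiplies by 0-1


def snapshot(shape, rate_hz, depth, interval_s, count):
    """Poll the LFO every interval_s seconds, as snapshot~ reports a signal."""
    return [lfo(shape, rate_hz, depth, i * interval_s) for i in range(count)]


def scale(x, in_low, in_high, out_low, out_high):
    """Linear range mapping in the manner of Max's scale object."""
    return out_low + (x - in_low) * (out_high - out_low) / (in_high - in_low)


# Usage: map LFO readings onto a hypothetical 200-2000 Hz cutoff range.
cutoffs = [scale(v, -1.0, 1.0, 200.0, 2000.0) for v in snapshot("sine", 0.5, 1.0, 0.1, 20)]
```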

User Interface

Max/MSP objects can be added to presentation mode. It is important to enable ‘open in presentation mode’ in the inspector tab so that the patch opens showing its user interface when loaded in Ableton. Most of the aforementioned ‘live’ objects are used in the presentation mode of the synthesiser as user interface objects. In addition, I have used a few Max ‘slider’ objects. I also used the panel object to create black background panels with white borders to separate the different parts of the synthesiser. Finally, I used a black, white and orange colour scheme for the synthesiser.

When planning the user interface in Ableton, I decided to use a display with limited controls in the regular Ableton audio device style and include a button to open a pop-up display with all the controls. To achieve this, I encapsulated all the objects apart from the MIDI objects and the plugout~ object (which routes the audio out into Ableton), creating a subpatch. I then used a pcontrol object, which opens or closes the encapsulated patch when it receives the message ‘open’ or ‘close’ from a message box. These message boxes did not match the aesthetics of the user interface, so I set up two live.text objects labelled ‘open’ and ‘close’ which, when pressed, send a bang to the corresponding message box to open or close the subpatch.

A key part of my user interface is the use of active and inactive colours to indicate whether an object is currently active. This is demonstrated in the effects section, where the live.dial objects are orange and white when the effect is turned on and grey when it is off. The live.dial colours are set in the inspector, where you can change both the active and inactive colours of the dial and needle. To switch a dial between them, it is sent the message ‘active 0’ (inactive colours) or ‘active 1’ (active colours) from a message box; each message box sends its message when it receives a bang. The live.toggle that turns the effect on or off outputs either 0 (effect off) or 1 (effect on). This output goes to a sel object which, when specified as ‘sel 0 1’, has an outlet for 0 and an outlet for 1 and sends a bang from the matching outlet (e.g., an input of 0 produces a bang from the outlet for 0). The outlet for 0 is connected to the message box ‘active 0’ and the outlet for 1 to the message box ‘active 1’. These messages are then sent to the live.dials.
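
The routing just described can be modelled as a lookup in Python. The dial names are hypothetical; the sketch only shows which message each dial would receive for a given toggle value.

```python
# The two message boxes, keyed by the sel outlet that bangs them.
ACTIVE_MESSAGES = {0: "active 0", 1: "active 1"}


def route_toggle(toggle_value, dials):
    """Model 'sel 0 1' feeding the 'active 0' / 'active 1' message boxes.

    Returns the (dial, message) pairs that would be sent. Values other than
    0 or 1 leave sel's matching outlets silent, so nothing is sent.
    """
    message = ACTIVE_MESSAGES.get(toggle_value)
    if message is None:
        return []
    return [(dial, message) for dial in dials]


print(route_toggle(1, ["drive_dial"]))  # [('drive_dial', 'active 1')]
```

Since the toggle already outputs 0 or 1, a single message box containing ‘active $1’ should produce the same two messages without sel; the sel version simply makes each state explicit.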

An interesting part of the user interface is its adaptive elements. One example is the LFOs and Envelopes section, which displays either the LFOs or the envelopes depending on which is selected in a live.tab object. This uses scripting messages to move objects behind or in front of a panel (note the video demonstrating this) (Catalogue, 2013). Another important example can be seen when the user attempts to remove the limiter or clipper from the output. When ‘none’ is selected from the menu, a message appears warning that the synthesiser can get loud and offering the option to revert to a limiter by pressing ‘No’. Pressing ‘Yes’ simply dismisses the message.

Another essential part of the interface is its automation capability within Ableton. Parameters of live user interface objects are automatically mapped into Ableton’s automation list. In the inspector of each live object there is a short name and a long name. The short name is shown on the interface object if it has that option (e.g., ‘live.tab’ does not). The long name is the name that appears in the automation list in Ableton. To get the same behaviour from a standard Max user interface object, ‘Parameter Mode’ needs to be enabled in the inspector tab. In my device, some objects appear on both the limited and the extended display and control one another, so I needed to hide the duplicates from the automation list. This is done in the inspector tab under ‘Parameter Visibility’: if this is set to ‘Hidden’, the object does not appear in Ableton’s automation list.
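
The naming and visibility settings above can be pictured as a small data structure. This Python sketch is a hypothetical model covering only the two visibility cases discussed here (visible or ‘Hidden’); the parameter names in the usage line are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Parameter:
    """Hypothetical model of a live object's parameter settings."""
    long_name: str   # name shown in Ableton's automation list
    short_name: str  # name shown on the object itself, where supported
    visibility: str = "Automated and Stored"  # 'Hidden' removes it from automation


def automation_list(parameters):
    """Long names that would be offered for automation (visibility not 'Hidden')."""
    return [p.long_name for p in parameters if p.visibility != "Hidden"]


# A dial duplicated on the limited display is hidden so it is listed only once.
params = [Parameter("Drive", "Drive"), Parameter("Drive Mini", "Drive", visibility="Hidden")]
print(automation_list(params))  # ['Drive']
```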